The Shape of Data

Making full use of telemetry data is a critical but challenging part of the job. You’ve collected it, so now what? You need to derive value from that data in some way; a bunch of data points sitting around in a time series database isn’t very interesting on its own. Observability is a process of data collection but also of data analysis. What story is the data telling you? In some ways, observability really is a lot like storytelling, and much of that story is told in shapes.

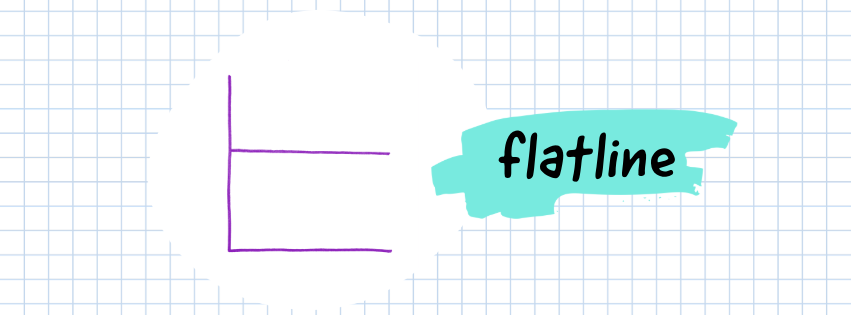

Ahhh, the stability of a flat line. Whether this signal is concerning is highly context-dependent. But often, this is the kind of graph we SREs dream of…no change. It can also represent a very slowly changing value, depending on the time scale the graph reflects. For example, disk space utilization will often appear as a more-or-less flat line because it changes at a comparatively slow rate.

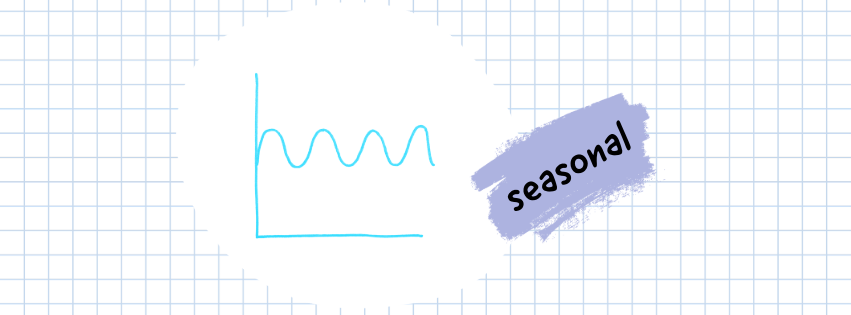

These make me think of waves, of the sea. Seasonality in data will appear as crests and troughs repeating in a rhythmic, wave-like pattern. Its shape is heavily affected by the time scale of the graph: a flat line at one time scale can turn into a seasonal graph at another. Seasonality is often driven by user behavior, which in turn is shaped by factors like when people are awake and likely to be using your service. Over longer time frames, these graphs might even reflect usage patterns related to holidays and social events like “back-to-school” periods. Think about which of these periods are relevant for your application or service.
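
To make the time-scale point concrete, here is a minimal sketch in plain Python. The hourly request counts are made up for illustration, and `downsample` is a hypothetical helper, not part of any monitoring tool. Averaging the same series into daily buckets hides the daily cycle entirely.

```python
from statistics import mean
from typing import List, Sequence


def downsample(values: Sequence[float], bucket: int) -> List[float]:
    """Average consecutive groups of `bucket` samples (drops a partial tail)."""
    usable = len(values) - len(values) % bucket
    return [mean(values[i:i + bucket]) for i in range(0, usable, bucket)]


# Hypothetical hourly request counts: quiet overnight, busy during the day.
one_day = [20] * 8 + [100] * 12 + [20] * 4
hourly = one_day * 3  # three identical days

daily = downsample(hourly, bucket=24)

# Spread between the highest and lowest point at each time scale.
print(max(hourly) - min(hourly))  # 80: clear crests and troughs
print(max(daily) - min(daily))    # 0: the same data looks flat
```

Real traffic is rarely this tidy, but the effect is the same: the bucket size you choose decides whether the waves are visible at all.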

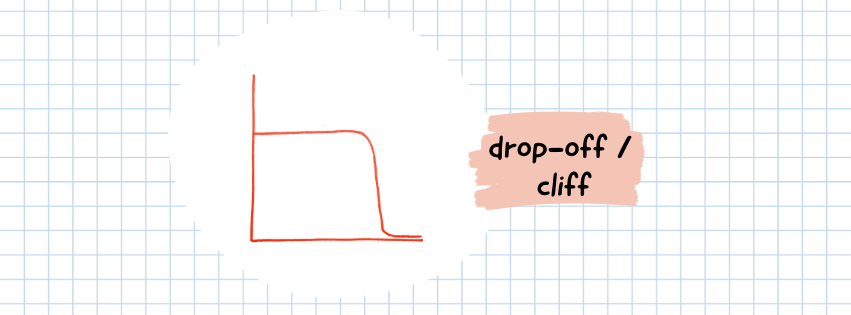

Definitely not good. Or very good. Which is it?

A cliff in a graph tells a very polarizing story: it’s either a good thing or a bad thing. Perhaps you just fixed a bug during an ongoing incident and this is a graph of errors that just plummeted. Yay, you’re done! Otherwise, if this is your traffic before and after, your day starts here ☹️. If you’re lucky enough to find one, these graphs provide a good indication of when an issue began or resolved, and may serve as the entry point to your investigation.
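
If you want to locate a cliff programmatically rather than by eye, one simple approach (a sketch, not a robust change-point detector) is to compare the average just before each point with the average just after it. The `find_cliff` function and the error counts below are hypothetical.

```python
from statistics import mean
from typing import Sequence


def find_cliff(values: Sequence[float], window: int) -> int:
    """Return the index where the mean of the next `window` samples differs
    most from the mean of the previous `window` samples, or -1 if the
    series is flat or too short."""
    best_index, best_delta = -1, 0.0
    for i in range(window, len(values) - window + 1):
        before = mean(values[i - window:i])
        after = mean(values[i:i + window])
        delta = abs(after - before)
        if delta > best_delta:
            best_index, best_delta = i, delta
    return best_index


# Hypothetical errors per minute: a fix lands at index 5.
errors = [50, 52, 49, 51, 50, 3, 2, 4, 3, 2]
print(find_cliff(errors, window=3))  # 5
```

The returned index is only a starting point for the investigation; whether the drop is good news or bad news still depends on what the metric measures.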

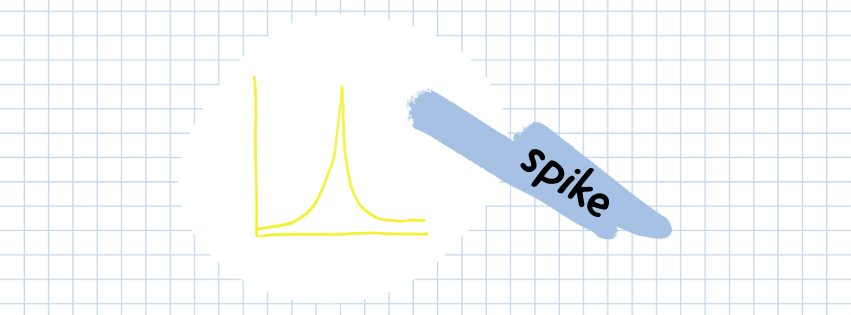

Like a bolt of lightning (sudden, extreme, brief), these events are typically over by the time you’re aware of them. Monitors based on spiky data can often be noisy, as they easily trip monitoring thresholds without the underlying condition persisting long enough to truly merit operator attention. If spikes are rare, it might be safe to disregard the occasional one as an anomaly. However, they can be a sign of a larger problem if they occur frequently, in a seasonal pattern, or sustain peak values for a meaningful duration. Monitor thresholds can be tuned accordingly, for example by requiring a value to stay above the threshold for some minimum duration before alerting.
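
One common way to quiet a spiky monitor is to alert only when the threshold is exceeded for several consecutive samples. The sketch below illustrates the idea in plain Python; `sustained_breach`, the threshold, and the sample data are all assumptions for illustration rather than any particular monitoring tool's API.

```python
from typing import Sequence


def sustained_breach(values: Sequence[float], threshold: float, min_points: int) -> bool:
    """Return True if `values` stays above `threshold` for at least
    `min_points` consecutive samples."""
    run = 0
    for v in values:
        # Extend the current run on a breach, reset it otherwise.
        run = run + 1 if v > threshold else 0
        if run >= min_points:
            return True
    return False


# A brief spike would trip a naive "any point above threshold" check...
spiky = [10, 11, 95, 12, 10, 11, 10]
# ...while a sustained elevation persists across several samples.
sustained = [10, 11, 95, 96, 97, 94, 12]

print(sustained_breach(spiky, threshold=90, min_points=3))      # False
print(sustained_breach(sustained, threshold=90, min_points=3))  # True
```

The trade-off is detection delay: the larger `min_points` is, the longer a real problem has to persist before anyone is notified.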

This graph can’t make up its mind and vacillates between (approximately) two values, resulting in a distinctive sawtooth-like pattern. A sawtooth pattern can sometimes be indicative of a process stuck in a restart loop (flapping) or other anomalous application behavior. Other times, it can reflect configuration errors in the tools used to collect the telemetry data itself that result in unnatural usage patterns like these. Are any of your collectors under- or over-reporting data?
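
A rough way to quantify this back-and-forth is to count how often a series crosses the midpoint between its lowest and highest values. This is a minimal sketch under that assumption; `count_crossings` and the gauge values are hypothetical, and what counts as "too many" crossings depends on your service.

```python
from typing import Sequence


def count_crossings(values: Sequence[float]) -> int:
    """Count how often the series crosses the midpoint between its min and max."""
    if len(values) < 2:
        return 0
    midpoint = (min(values) + max(values)) / 2
    above = [v > midpoint for v in values]
    # Each change between "above" and "not above" is one crossing.
    return sum(1 for prev, cur in zip(above, above[1:]) if prev != cur)


# Hypothetical "process up" gauge sampled at a fixed interval.
flapping = [1, 1, 0, 0, 1, 1, 0, 0, 1, 1]
steady = [1, 1, 1, 1, 1, 1, 1, 1, 1, 0]

print(count_crossings(flapping))  # 4
print(count_crossings(steady))    # 1
```

A single crossing looks more like a cliff; many crossings in a short window is the sawtooth worth investigating.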

In all of these cases, magnitude, time scale, and your domain knowledge are essential to painting the full picture. So, take that graph and zoom out, zoom in, play around with it a little bit. What story do you see?