What is Observability?

Several of my past engineering roles have been focused on the field of “observability” and this is inevitably one of the first questions that comes up when I talk about my career. And not just with people like my friends and in-laws, but for people that I work with too. Often lumped in as one of many Site Reliability Engineering (SRE) or DevOps team responsibilities, even other industry professionals who are familiar with observability-related tasks and tooling might not fully grok what this misunderstood domain is really all about. And it’s not surprising why – the Wikipedia introduction that many are exposed to when first learning about the topic is terrible without context, an obtuse definition deriving from 1960s control theory, stating rather dryly:

Observability is a measure of how well the internal state of a system can be inferred from knowledge of its external outputs.

A clinical description that, while technically correct, feels lacking in substance, leaving itself open to a broader interpretation of its true meaning. Every observability practitioner likely has their own mental model of what observability is conceptually and how it should be applied as a field of practice within software engineering. So, how to answer such a seemingly mundane query?

Allow me to offer an alternative:

Observability provides an external perspective into a system’s internal operational state by analyzing specific, measurable outputs using specialized data, tools, and skills.

Let’s unpack this definition.

A “system” can encompass different scopes, from an entire Kubernetes cluster to a single application or even the SDLC itself. There is no requirement that a system be a purely technical construct; observability concepts and techniques can even be applied to systems of process, so long as there are meaningful, quantitative outputs from which we can derive functional insight. Even the human body is a system of almost innumerable externally-quantifiable outputs – like heart-rate or temperature.

In many ways, observability is not so different from other data-related pursuits: it is about asking questions and providing the means by which to find answers. Observability feeds curiosity. Good observability can even reveal the unknown unknowns – the questions you wouldn’t have thought to ask in the first place. It may be no small accident that feedback loops like this are an important control theory concept as well. But perhaps unlike traditional data analysis tasks, not just any question drives our inquiry – observability is focused on answering operational questions, those related to system behavior and functioning, which can be answered by measuring specific quantitative outputs.

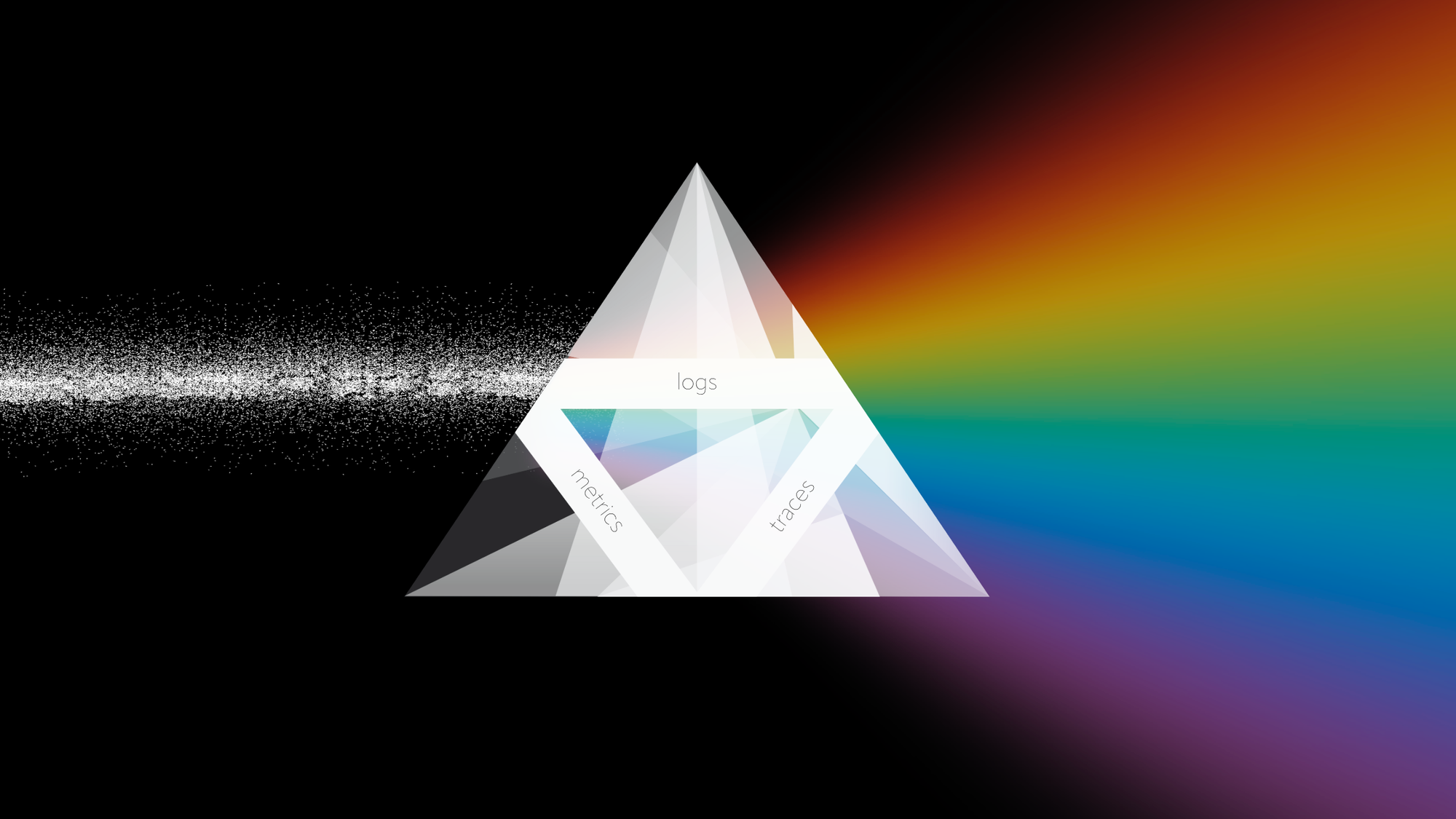

The data (logs, metrics, and traces) and tools that we use to answer (time series databases, log analysis platforms, and visualization solutions) are specifically tuned to solve this type of problem efficiently. And with good reason – in the observability domain, we often need to derive insight from data quickly and under less-than-ideal circumstances, so the tools must allow us to navigate (and discard) vast quantities of data with speed and agility. It may be useful to think of these platforms like a prism, allowing you, the observer, to manipulate a signal and see it from different angles, in different colors, in a new light.

To decide which set of lenses to use, you might ask yourself the following kinds of questions:

- What timescale is relevant? Short- or long-term? Am I investigating an event that just occurred or am I reviewing longitudinal trends?

- How familiar am I with the data? Have I been previously exposed to it? Do I understand the edge cases and what “normal” looks like?

- How soon do I need an answer? Seconds? Hours? Days?

- Why am I asking? Am I…reviewing a triggered alarm, identifying prospective problem areas as part of a system design review, reporting on a new feature’s usage and performance?

Better understanding these factors may help guide which telemetry types (logs, metrics, or traces) and analytic approaches will be most useful in getting the necessary answers. For example, logs are context-rich but can be slow to analyze and retention limits are often in place, affecting how far back data can be queried ad-hoc. Metrics are fast and easy to work with, especially for mathematical calculations, but are best-suited for aggregate use cases, as cardinality limitations often prevent more fine-grained analysis.

An SRE who has been paged (possibly while sleeping) about a system malfunction needs this ability to quickly assess the current system state and understand (possibly while still waking up) what corrective actions can be applied safely. But so does the application engineer tasked with refactoring a slow endpoint as part of planned work. While the tools they use may be the same, the observability lens through which these users view the data to find their answers can change based on the questions being asked, the reason for asking, the intended audience, and how quickly an answer is needed.

Anyone responsible for the ongoing development, maintenance, and overall sustainability of a system should feel empowered to ask questions and make use of observability platforms to find their answers – not just someone with “engineer” in their job title. Incident managers, product managers, designers, QA testers, and engineering leadership can benefit from investing in a deeper understanding of how the systems they are responsible for actually behave in the real world. And although working with observability data and tools might feel unfamiliar to some at first, these are skills that can (and should!) be honed like any other: with training and practice.

But for the curious, surprising insights can be found anywhere simply by asking the right questions.